Yisong Yue (he/him)

California Institute of Technology

1200 E. California Blvd.

CMS, 305-16

Pasadena, CA 91125

Office: 303 Annenberg


About

I am a professor of Computing and Mathematical Sciences at the California Institute of Technology. My research interests lie primarily in machine learning, and span the entire theory-to-application spectrum from foundational advances all the way to deployment in real systems.

I work closely with domain experts to understand the frontier challenges in applied machine learning, distill those challenges into mathematically precise formulations, and develop novel methods to tackle them.

Asari AI: I am a founding advisor for Asari AI, where I help design AI co-inventors (AI agents that can plan, abstract, and verify complex design tasks).

Latitude AI: I am currently a (part-time) Principal Scientist at Latitude AI, where I work on machine learning approaches to behavior modeling and motion planning for autonomous driving.

ICLR 2025: I am serving as the General Chair of ICLR 2025. The Program Chairs are Carl Vondrick (SPC), Rose Yu, Violet Peng, Fei Sha, and Animesh Garg.

Current Research

My current research interests can be broadly organized into three overlapping groups:

AI for Autonomy: study how AI methods can enable novel capabilities in autonomous systems; characterize and address key technical bottlenecks (e.g., data-driven safety guarantees); deploy in real systems.

Selected Publications

- Morphological-Symmetry-Equivariant Heterogeneous Graph Neural Network for Robotic Dynamics Learning

Fengze Xie, Sizhe Wei, Yue Song, Yisong Yue, Lu Gan

Conference on Learning for Dynamics and Control (L4DC), 2025

[arxiv]

- Robust Agility via Learned Zero Dynamics Policies

Noel Csomay-Shanklin, William D. Compton, Ivan Dario Jimenez Rodriguez, Eric R. Ambrose, Yisong Yue, Aaron D. Ames

International Conference on Intelligent Robots and Systems (IROS), 2024

[arxiv]

- Neural-Fly Enables Rapid Learning for Agile Flight in Strong Winds

Michael O’Connell*, Guanya Shi*, Xichen Shi, Kamyar Azizzadenesheli, Anima Anandkumar, Yisong Yue, Soon-Jo Chung

Science Robotics, May 2022.

[arxiv][online][code][video][press release]

- MLNav: Learning to Safely Navigate on Martian Terrains

Shreyansh Daftry, Neil Abcouwer, Tyler Del Sesto, Siddarth Venkatraman, Jialin Song, Lucas Igel, Amos Byon, Ugo Rosolia, Yisong Yue, Masahiro Ono

IEEE Robotics and Automation Letters (RA-L), May 2022

[conference][journal][video]

- Preference-Based Learning for Exoskeleton Gait Optimization

Maegan Tucker*, Ellen Novoseller*, Claudia Kann, Yanan Sui, Yisong Yue, Joel Burdick, Aaron D. Ames

International Conference on Robotics and Automation (ICRA), May 2020.

(Best Paper Award)

[pdf][arxiv][demo video][project]

AI for Science: study how AI methods can improve workflows in science and accelerate knowledge discovery; develop methods for automated experiment design and human-intelligible modeling; deploy in real systems.

Selected Publications

- Population Transformer: Learning Population-level Representations of Neural Activity

Geeling Chau, Christopher Wang, Sabera Talukder, Vighnesh Subramaniam, Saraswati Soedarmadji, Yisong Yue, Boris Katz, Andrei Barbu

International Conference on Learning Representations (ICLR), 2025

(Oral Presentation)

[arxiv] -

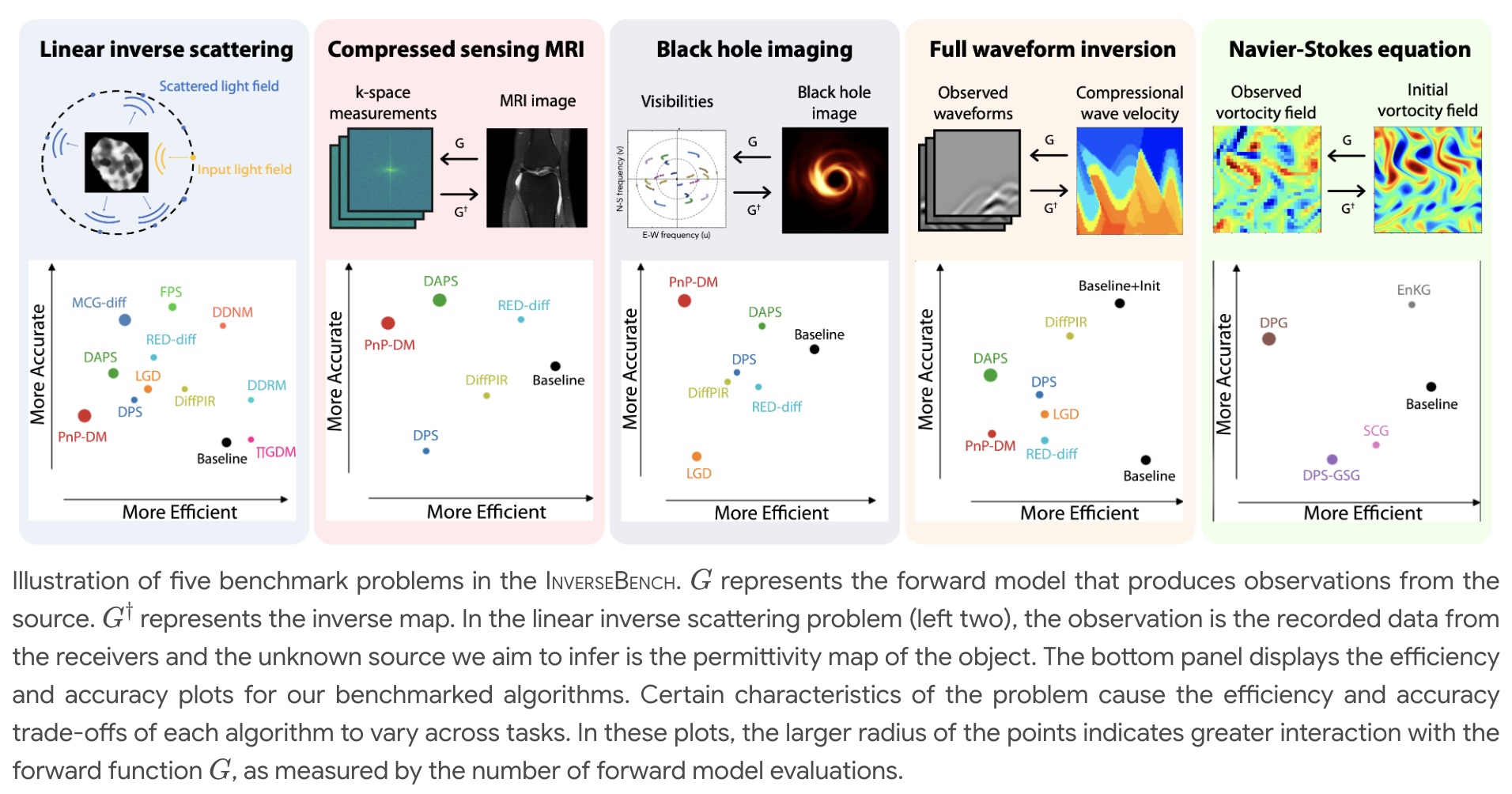

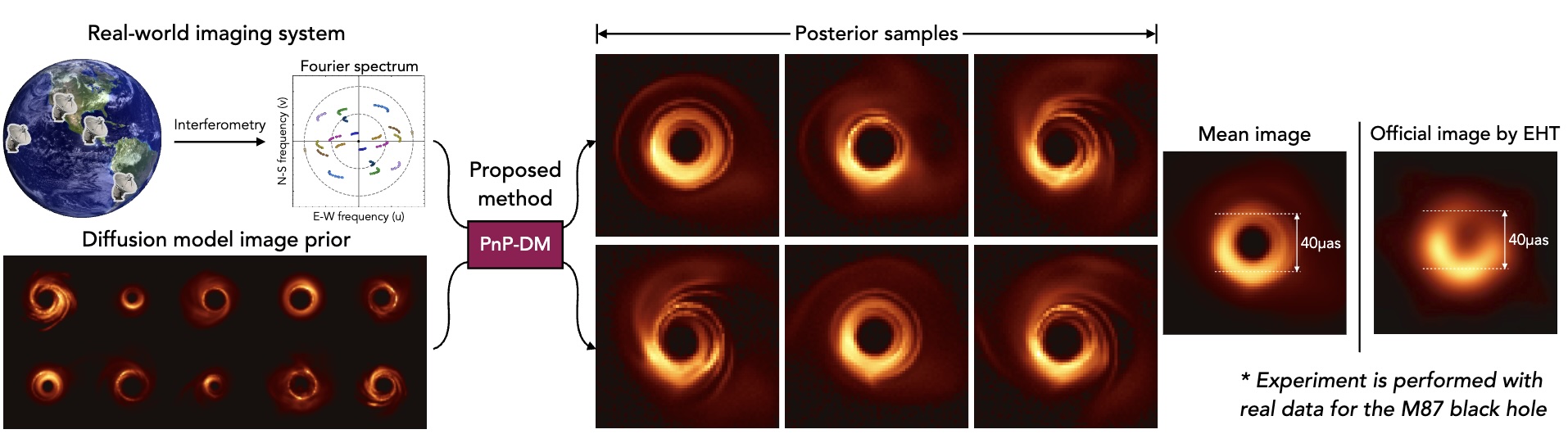

InverseBench: Benchmarking Plug-and-Play Diffusion Models for Inverse Problems in Physical Sciences

Hongkai Zheng, Wenda Chu, Bingliang Zhang, Zihui Wu, Austin Wang, Berthy T. Feng, Caifeng Zou, Yu Sun, Nikola Kovachki, Zachary E. Ross, Katherine L. Bouman, Yisong Yue

International Conference on Learning Representations (ICLR), 2025

(Spotlight)

[project] -

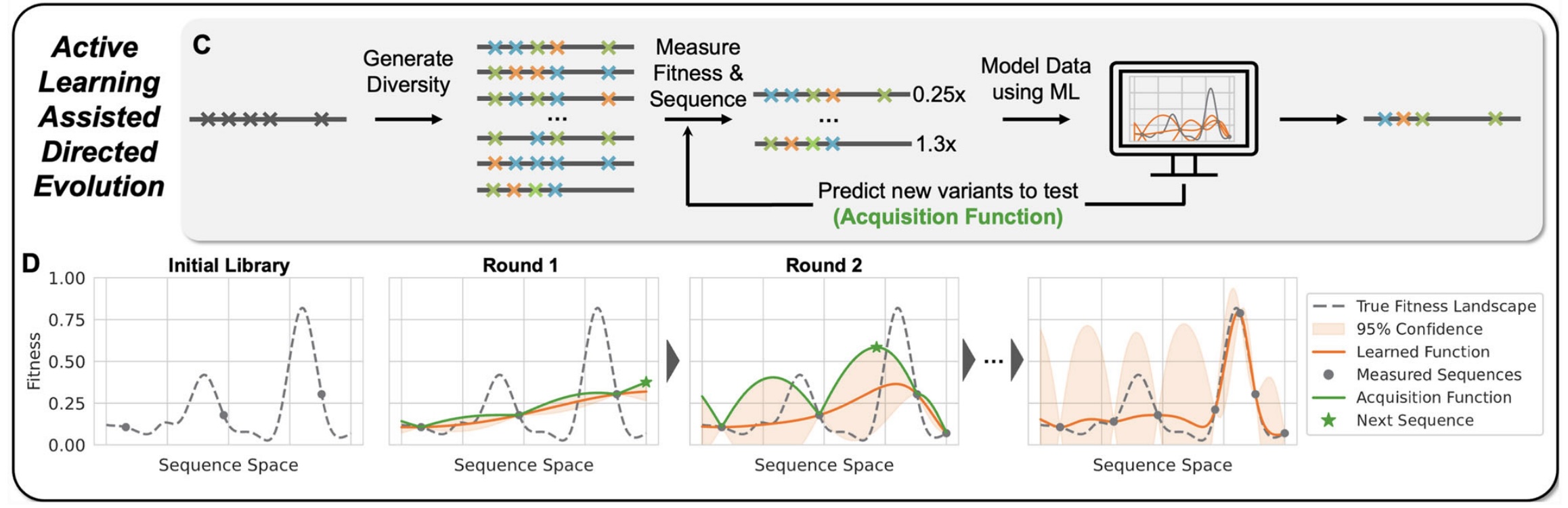

Active Learning-Assisted Directed Evolution

Jason Yang, Ravi G. Lal, James C. Bowden, Raul Astudillo, Mikhail A. Hameedi, Sukhvinder Kaur, Matthew Hill, Yisong Yue, Frances H. Arnold

Nature Communications, 2025

[online][bioRxiv]

- Self-Supervised Keypoint Discovery in Behavioral Videos

Jennifer J. Sun*, Serim Ryou*, Roni Goldshmid, Brandon Weissbourd, John Dabiri, David J. Anderson, Ann Kennedy, Yisong Yue, Pietro Perona

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2022.

[arxiv]

- Task Programming: Learning Data Efficient Behavior Representations

Jennifer J. Sun, Ann Kennedy, Eric Zhan, David J. Anderson, Yisong Yue, Pietro Perona

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2021.

(Best Student Paper Award)

[arxiv][code][project]

Core AI/ML Research: study the underlying fundamental questions pertaining to practical algorithm design, inspired by real-world applications in science and engineering.

Selected Publications

- Find Any Part in 3D

Ziqi Ma, Yisong Yue, Georgia Gkioxari

[project][arxiv][demo][code]

- Practical Bayesian Algorithm Execution via Posterior Sampling

Chu Xin Cheng, Raul Astudillo, Thomas Desautels, Yisong Yue

Neural Information Processing Systems (NeurIPS), 2024

[arxiv] -

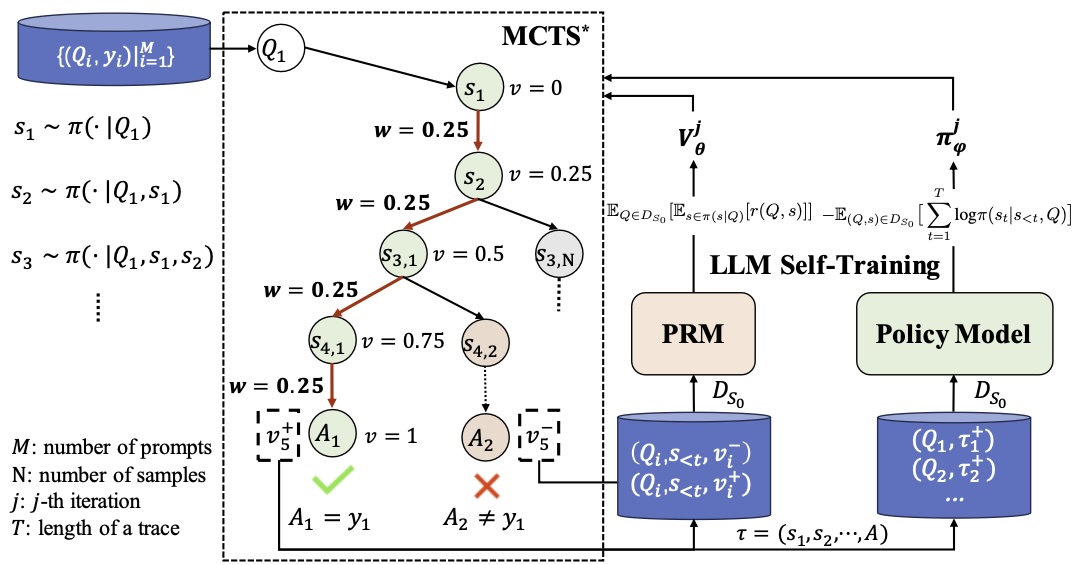

ReST-MCTS*: LLM Self-Training via Process Reward Guided Tree Search

Dan Zhang, Sining Zhoubian, Ziniu Hu, Yisong Yue, Yuxiao Dong, Jie Tang

Neural Information Processing Systems (NeurIPS), 2024

[arxiv]

- Online Policy Optimization in Unknown Nonlinear Systems

Yiheng Lin, James A. Preiss, Fengze Xie, Emile Anand, Soon-Jo Chung, Yisong Yue, Adam Wierman

Conference on Learning Theory (COLT), 2024

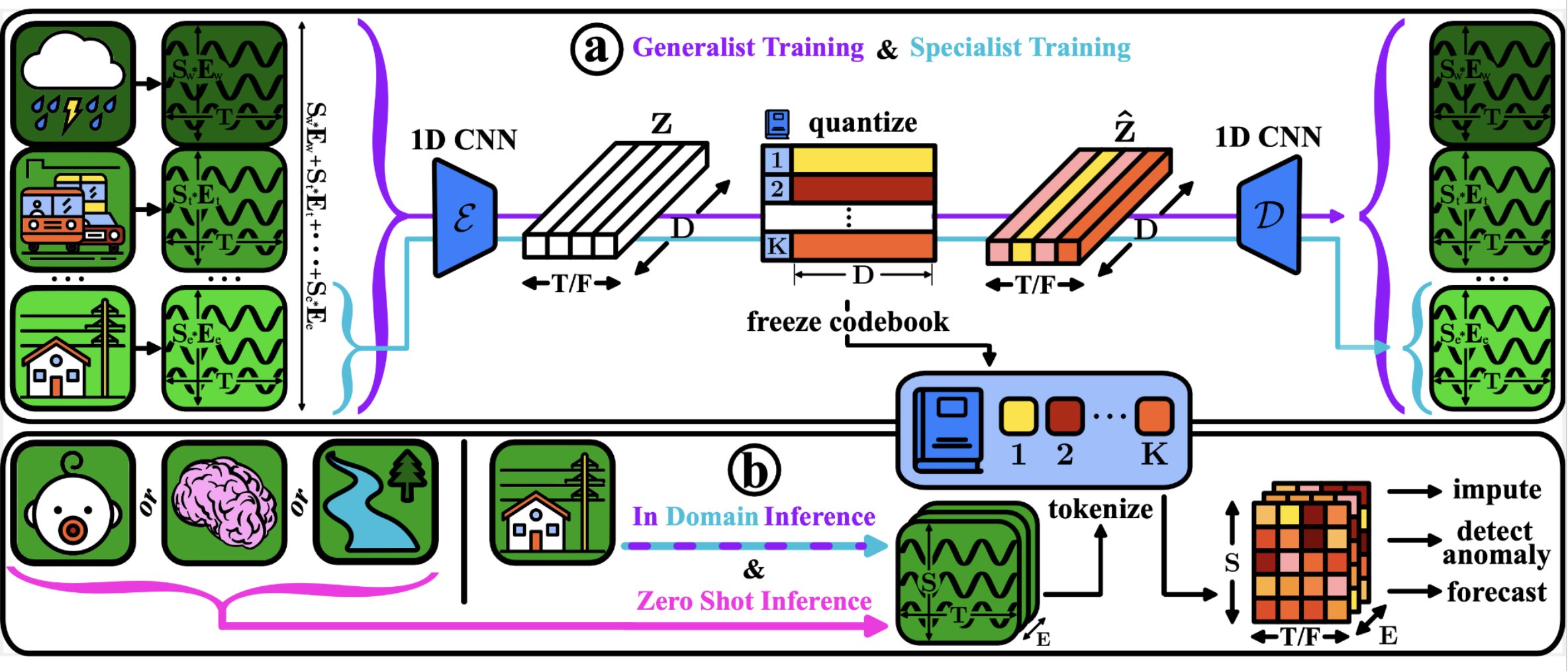

[arxiv]

- TOTEM: TOkenized Time Series EMbeddings for General Time Series Analysis

Sabera Talukder, Yisong Yue, Georgia Gkioxari

Transactions on Machine Learning Research (TMLR), 2024

[arxiv][code]

- Neurosymbolic Programming

Swarat Chaudhuri, Kevin Ellis, Oleksandr Polozov, Rishabh Singh, Armando Solar-Lezama, Yisong Yue

Foundations and Trends in Programming Languages, Volume 7: No. 3, pages 158-243, December 2021.

[preprint][online]

- Batch Policy Learning under Constraints

Hoang M. Le, Cameron Voloshin, Yisong Yue

International Conference on Machine Learning (ICML), June 2019.

(Oral Presentation)

[pdf][arxiv][project]

News & Announcements

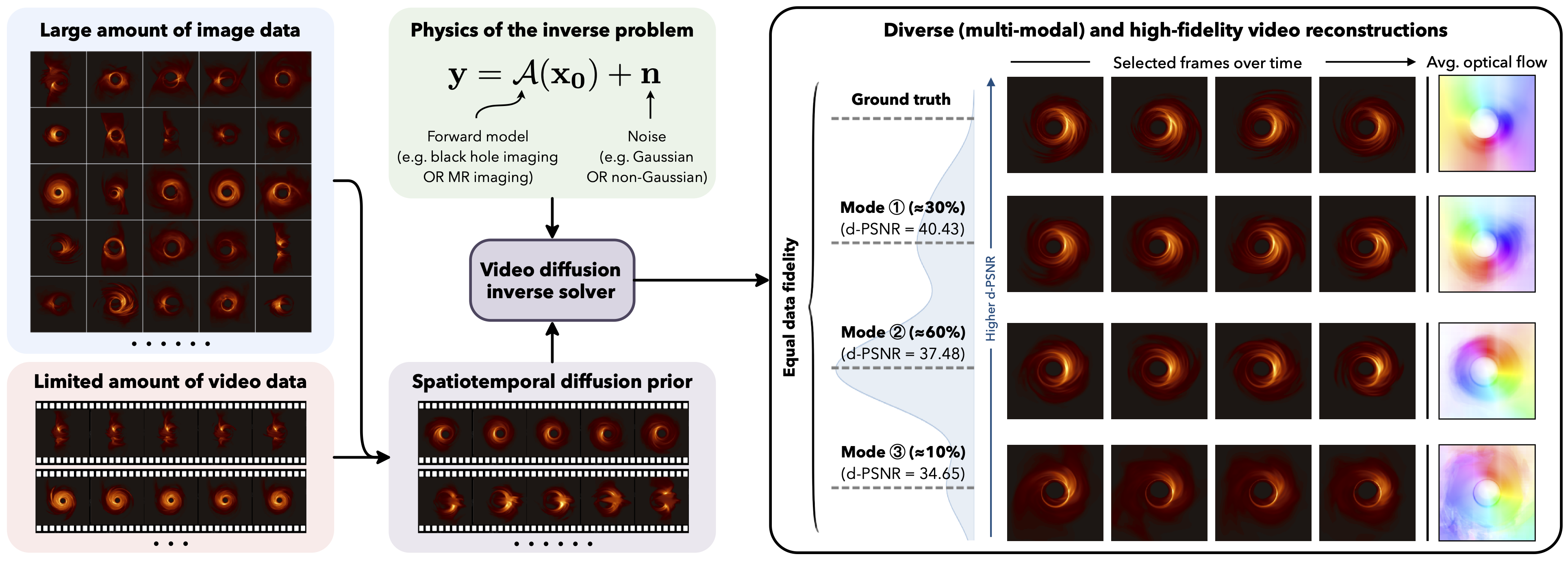

- Video Inverse Problems -- we developed a diffusion model-based approach for solving video inverse problems. [arxiv][project][code]

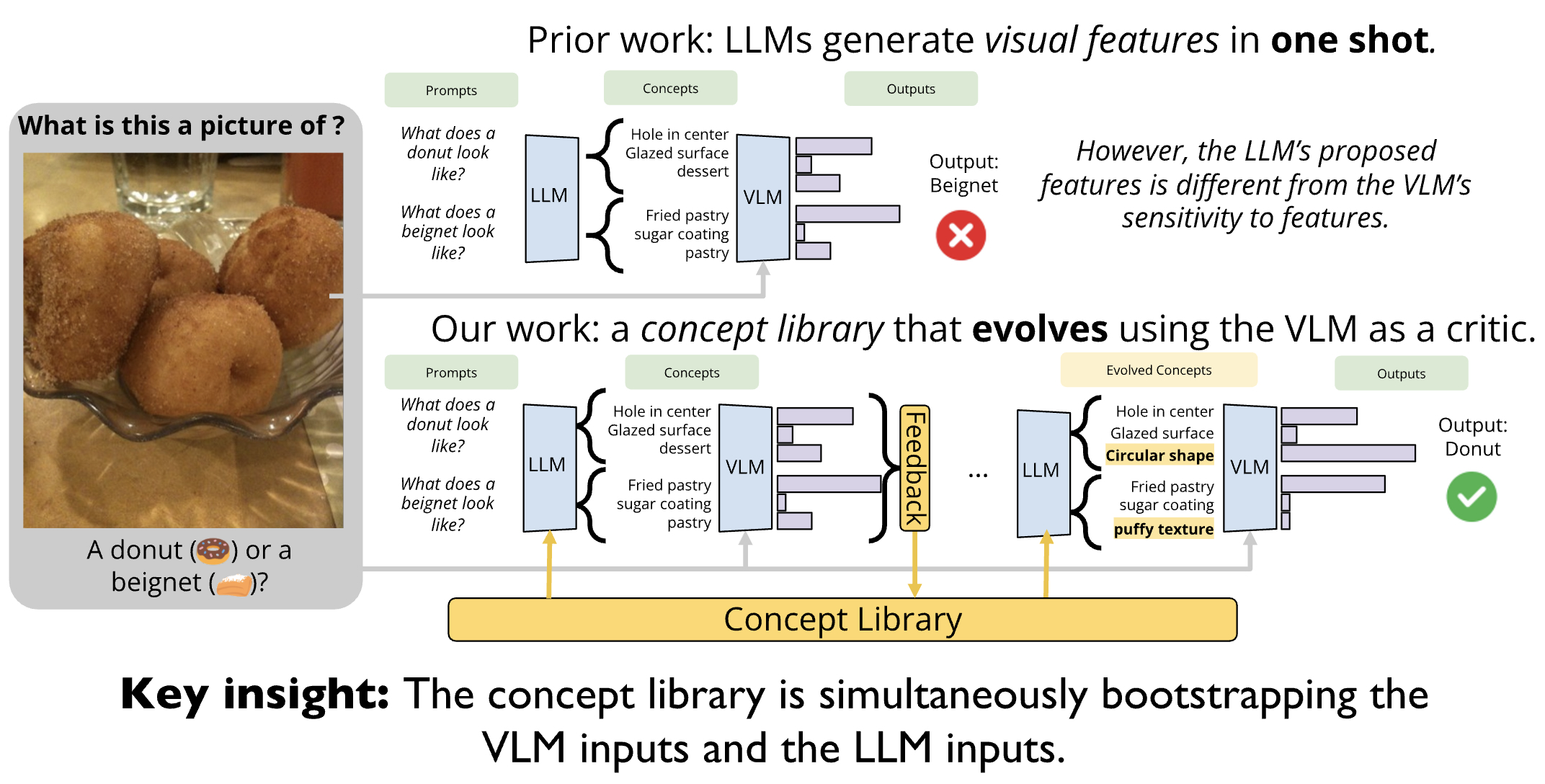

- Self-Evolving Visual Concept Library -- we developed Escher: Self-Evolving Visual Concept Library using Vision-Language Critic. [project]

- InverseBench -- we developed a benchmarking framework for plug-and-play diffusion models for inverse problems in physical sciences. [project]

- Active learning-assisted directed evolution -- we developed a new machine learning approach to accelerate directed evolution for protein optimization. Our method is validated in the wet lab, where we improved the yield of a desired product of a non-native cyclopropanation reaction from 12% to 93%. [online][bioRxiv]

- Find3D -- we developed an approach for training a foundation model for 3D part-level understanding. The main contribution is a data engine that can generate large amounts of 3D training data using existing 2D foundation models. [project]

- Self-Training for LLM-based Tree-Search -- we developed a self-training approach for process-reward learning to empower LLM-based tree search. [arxiv]

- Plug-and-Play Bayesian Inversion via Diffusion Models & Physics -- we developed a principled plug-and-play inverse imaging approach that blends a diffusion model prior with physics-based forward models. [arxiv]

- Humanlike Bot Behavior -- we developed an approach for generating humanlike bot behavior from demonstrations, demoed in a gym environment within the Fortnite game engine. [project][paper]

- Farewell! We had five members depart the group during the 2023-2024 academic year:

  - Lu Gan completed her postdoc and has started as a faculty member at Georgia Tech.

  - Ziniu Hu completed his postdoc and has started at xAI.

  - Yujia Huang completed her Ph.D. and has started at Citadel Securities.

  - James Preiss completed his postdoc and has started as a faculty member at UC Santa Barbara.

  - Kaiyu Yang completed his postdoc and has started at Meta AI.

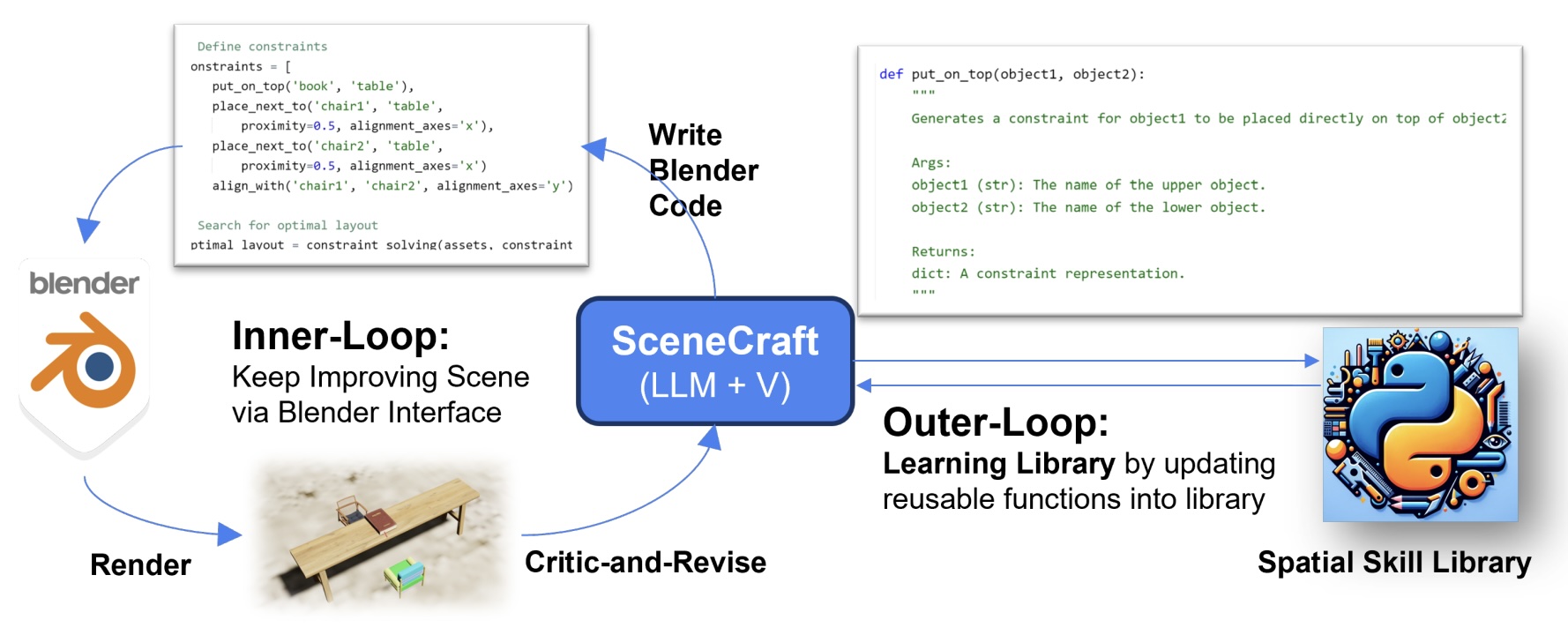

- SceneCraft: An LLM Agent for Synthesizing 3D Scene as Blender Code -- we developed a Large Language Model (LLM) agent for converting text descriptions into Blender-executable Python scripts that render complex scenes with up to a hundred 3D assets. This process requires complex spatial planning and arrangement, which we tackle through a combination of advanced abstraction, strategic planning, and library learning. [arxiv]

- Tokenized Time Series Embeddings -- we developed a framework for learning a tokenization for downstream time series modeling, including training generalist models that can be applied to many domains. [arxiv][code]

- Symbolic Music Generation with Non-Differentiable Rule Guided Diffusion -- we developed a method for guided diffusion using non-differentiable rules, called Stochastic Control Guidance. Our approach is inspired by path integral control and can be applied in a plug-and-play way to any diffusion model. We demonstrate our approach on symbolic music generation. [arxiv][website]